Cost – understanding compute

As previously discussed, ensuring that AI solutions provide genuine value in international development requires careful cost-benefit analysis. In resource-constrained settings, AI must offer a clear advantage over existing methods, justifying both development and operational expenses.

The model developed by Rara Labs significantly reduced the cost of checking NWASH data for errors. In an experiment on a standard workstation, the model processed 200 samples in under 30 seconds, whereas a human expert required over 30 minutes. This efficiency allows specialists to focus on strategic decision-making rather than time-consuming manual validation. However, assessing the true cost-effectiveness of AI requires looking beyond efficiency gains. It means weighing upfront development costs and long-term maintenance expenses against wider potential benefits, such as improved data accuracy, which may further justify the investment.
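To make the efficiency claim concrete, the short calculation below converts the quoted timings into per-sample rates. It uses only the figures given above. Because those figures are stated as bounds ("under 30 seconds", "over 30 minutes"), the speed-up it reports is a lower bound. It says nothing about the development and maintenance costs discussed next.

```python
# Figures quoted in the text. They are bounds, so the speed-up is a lower bound.
SAMPLES = 200
MODEL_SECONDS = 30        # upper bound for the model
HUMAN_SECONDS = 30 * 60   # lower bound for the human expert (30 minutes)


def per_sample_seconds(total_seconds: float, samples: int) -> float:
    """Average time spent validating one sample."""
    return total_seconds / samples


def speed_up(human_seconds: float, model_seconds: float) -> float:
    """How many times faster the model is than the human expert."""
    return human_seconds / model_seconds


model_rate = per_sample_seconds(MODEL_SECONDS, SAMPLES)
human_rate = per_sample_seconds(HUMAN_SECONDS, SAMPLES)
factor = speed_up(HUMAN_SECONDS, MODEL_SECONDS)

print(f"Model: {model_rate:.2f} s/sample, expert: {human_rate:.1f} s/sample")
print(f"Speed-up: at least {factor:.0f}x")
# Model: 0.15 s/sample, expert: 9.0 s/sample
# Speed-up: at least 60x
```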

The cost of developing AI solutions is influenced by several factors, including:

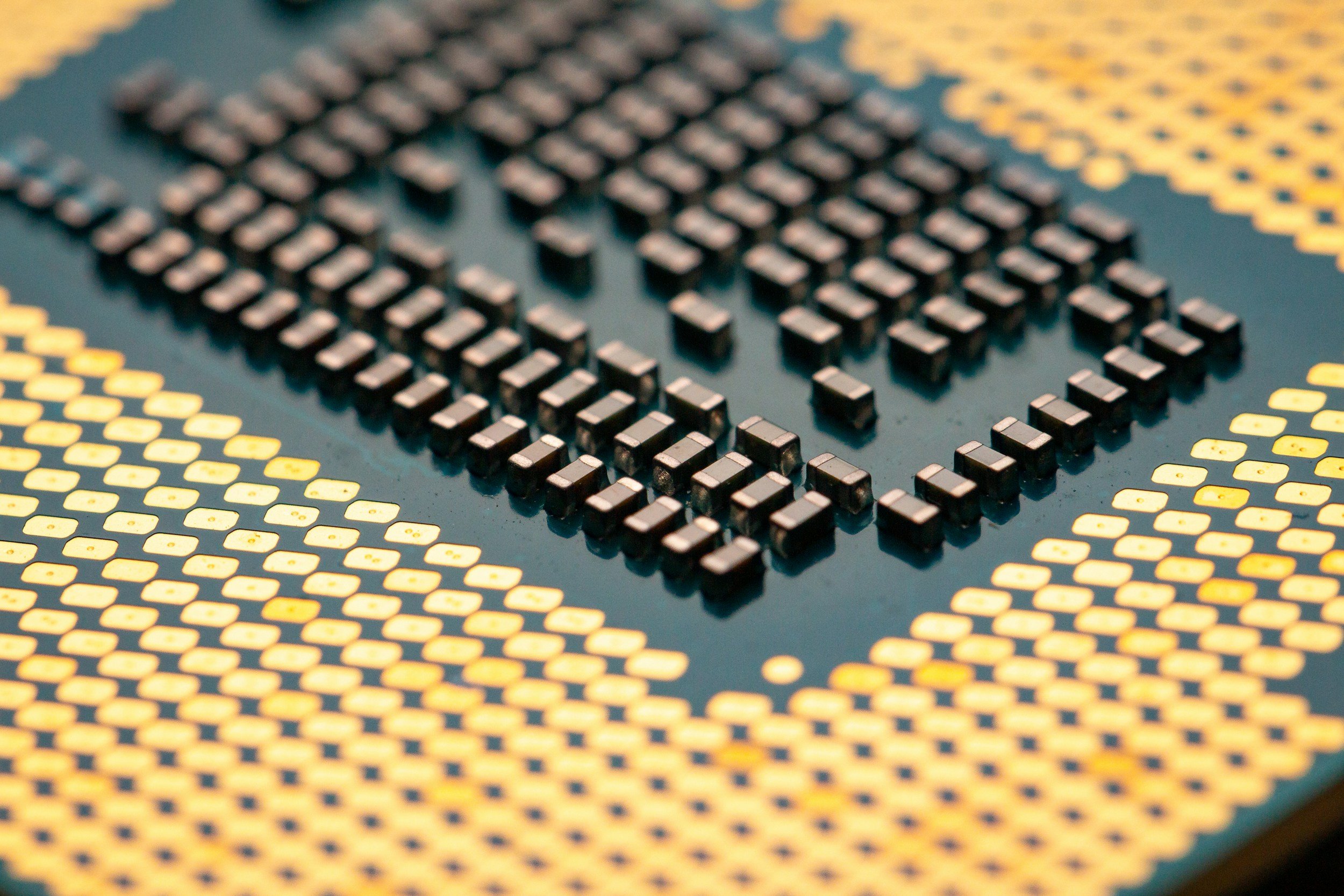

- Hardware: the infrastructure needed to collect, store, and process data.
- Human expertise: the cost of AI engineers, software developers, and domain specialists.
- Compute resources: the energy and processing power required for training, fine-tuning, and running the model.
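One simple way to reason about these factors together is to total them and see which one dominates. The sketch below is a minimal illustration under stated assumptions. The class name and every figure in it are hypothetical placeholders, not Rara Labs data. It covers only upfront costs, so "compute" here means training and fine-tuning, and day-to-day running costs are left out.

```python
from dataclasses import dataclass


@dataclass
class DevelopmentCosts:
    """Upfront cost components named in the text (hypothetical structure)."""
    hardware: float          # collecting, storing and processing data
    human_expertise: float   # engineers, developers, domain specialists
    compute: float           # training and fine-tuning only

    def total(self) -> float:
        return self.hardware + self.human_expertise + self.compute

    def shares(self) -> dict[str, float]:
        """Fraction of the total attributable to each component."""
        t = self.total()
        return {
            "hardware": self.hardware / t,
            "human_expertise": self.human_expertise / t,
            "compute": self.compute / t,
        }


# Placeholder figures in an arbitrary currency, for illustration only.
costs = DevelopmentCosts(hardware=5_000, human_expertise=40_000, compute=5_000)
print(costs.total())   # 50000
print(costs.shares())
```

Swapping in a project's real estimates shows where cost-saving effort is best spent.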

For example, in the Early Warning Forest Fire System, a major challenge was developing a cost-effective data collection strategy. Although the dataset itself was readily available, compute costs remained a key consideration. Recent advances such as model distillation (training a smaller, more efficient model to reproduce the behaviour of a larger one) and hardware optimisation (using AI-specific chips such as TPUs) offer potential pathways to lower costs without sacrificing performance. As AI adoption in development settings grows, balancing computational efficiency with accessibility will be critical to ensuring sustainable, cost-effective deployments.
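To illustrate what distillation optimises, the sketch below implements the loss used in one common form of the technique. A small "student" model is trained to match the softened output probabilities of a large "teacher". This is a minimal, plain-Python sketch for a single example. The models, the training loop and the temperature value are assumptions, and nothing here describes how the Forest Fire project was built. In practice this term is usually minimised with respect to the student's parameters, often alongside an ordinary loss on the true labels.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Convert raw scores into probabilities, softened by the temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL divergence from the teacher's softened distribution to the student's.

    Multiplied by temperature**2 so that the loss scale stays comparable
    across different temperature settings.
    """
    p = softmax(teacher_logits, temperature)  # teacher "soft targets"
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


# Hypothetical outputs for one input with three classes.
teacher = [4.0, 1.0, 0.5]
student_close = [3.8, 1.1, 0.4]   # already imitates the teacher well
student_far = [0.5, 1.0, 4.0]     # prefers a different class

print(distillation_loss(teacher, student_close))
print(distillation_loss(teacher, student_far))
```

The student that already imitates the teacher receives a much smaller loss, which is the signal training uses to pull the smaller model towards the larger one's behaviour.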

“there must be a clear case that the value-add being provided by AI outweighs the cost of developing a tool…”

What is compute, and why is it so important to developing AI use cases?

Any application running on a computer needs computational resources (storage, processing power and memory) to function. Compute is a measurable quantity of the computational resources used to perform a specific task. Storing training data, fine-tuning systems and using AI systems day to day all require compute (AWS, n.d.).
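One common way to put a number on compute is accelerator-hours: the number of processors (here GPUs) multiplied by how long they run. The sketch below, with hypothetical figures, turns that quantity into a cost. Using GPU-hours is an assumption chosen because it maps directly onto hourly billing. Compute can also be measured in other units, such as floating-point operations or energy consumed.

```python
def gpu_hours(num_gpus: int, wall_clock_hours: float) -> float:
    """Compute consumed, measured as accelerator-hours."""
    return num_gpus * wall_clock_hours


def compute_cost(hours: float, price_per_gpu_hour: float) -> float:
    """Monetary cost of that compute at a given hourly rate."""
    return hours * price_per_gpu_hour


# Hypothetical job: 4 GPUs for 12 hours at a placeholder rate of 2.50 per GPU-hour.
used = gpu_hours(num_gpus=4, wall_clock_hours=12.0)
cost = compute_cost(used, price_per_gpu_hour=2.50)
print(used, cost)  # 48.0 120.0
```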

There are broadly two ways in which this compute can be accessed:

- Organisations can purchase their own physical servers, which can be used to store data and run the applications that make use of that data.
- Alternatively, companies like Amazon, Google and Microsoft allow organisations to rent computational resources on demand via the internet (Michal, 2024).
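The choice between these two options can be framed as a break-even calculation. Owning has a large upfront price plus a smaller running cost per hour, while renting has only an hourly rate. The sketch below finds the number of hours of use after which owning becomes cheaper. It is a simplified model under stated assumptions: it ignores depreciation, staffing and discounts, and all prices are hypothetical.

```python
import math


def break_even_hours(purchase_price: float, own_cost_per_hour: float,
                     rent_cost_per_hour: float) -> float:
    """Hours of use after which owning a server is cheaper than renting.

    Owning:  purchase_price + own_cost_per_hour * h
    Renting: rent_cost_per_hour * h
    """
    saving_per_hour = rent_cost_per_hour - own_cost_per_hour
    if saving_per_hour <= 0:
        return math.inf  # owning never catches up with renting
    return purchase_price / saving_per_hour


# Hypothetical: a 20,000 server costing 0.50/hour to run (power, upkeep)
# versus renting comparable capacity at 3.00/hour.
hours = break_even_hours(20_000, 0.50, 3.00)
print(f"{hours:,.0f} hours (~{hours / 24:.0f} days of continuous use)")
# 8,000 hours (~333 days of continuous use)
```

The break-even point assumes the server is kept busy. If it sits idle between training runs, its effective cost per useful hour rises and renting on demand becomes more attractive.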

Rara Labs confirm that the cost of compute is highest during the training phase. Fine-tuning an off-the-shelf model for a specific use case requires a significant amount of compute, and if your fine-tuned model fails to achieve the intended outcome, you have to go back to the drawing board and restart the training process. Once the model is built, the compute needed to run it day to day, and therefore its cost, falls.
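The cost of restarting failed training runs can be estimated in a simple way. Assume each run independently succeeds with a fixed probability p. Then the number of runs needed follows a geometric distribution with mean 1/p. The sketch below uses that idea to estimate expected training cost and compares it with the much lower cost of routine use. The independence and fixed success rate are simplifying assumptions, since in practice later attempts benefit from lessons learned. All figures are hypothetical.

```python
def expected_training_cost(cost_per_run: float, success_probability: float) -> float:
    """Expected cost when failed runs must be restarted from scratch.

    With independent runs that each succeed with probability p, the
    expected number of runs is 1/p (mean of a geometric distribution).
    """
    if not 0 < success_probability <= 1:
        raise ValueError("success_probability must be in (0, 1]")
    return cost_per_run / success_probability


def days_to_match_training(training_cost: float, daily_running_cost: float) -> float:
    """How many days of routine use cost as much as training did."""
    return training_cost / daily_running_cost


# Hypothetical: each fine-tuning run costs 400, half of runs succeed,
# and running the finished model costs 2 per day.
training = expected_training_cost(400, 0.5)
print(training)                                 # 800.0
print(days_to_match_training(training, 2.0))    # 400.0
```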

As we’ve explained previously, building a use case that suits its context and delivers high accuracy over time is a continuous process. Training data does not provide a permanent foundation on which a tool can adapt to changing real-world demands. It is, rather, a snapshot of a specific point in time. As the context changes, new data needs to be collected to represent those changes and feed them into the system. A McKinsey study exploring one hundred cross-sectoral use cases found that at least a third of them required a monthly data update, and just under one in four required a daily refresh (Chui et al., 2018).

Although compute costs peak during training and drop off once the model has been developed, ongoing costs remain: optimising the model over the long term requires continual retraining and adaptation of the solution.
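The sketch below shows how refresh frequency drives these ongoing costs. It totals initial training, recurring retraining and running costs over several years for the monthly and daily cadences mentioned in the McKinsey findings. The per-refresh cost is assumed to be much smaller than initial training, as for an incremental update rather than a full retrain. That assumption and every figure are hypothetical.

```python
# Refresh cadences mentioned in the text, as refreshes per year.
REFRESHES_PER_YEAR = {"monthly": 12, "daily": 365}


def annual_retraining_cost(cost_per_refresh: float, cadence: str) -> float:
    """Compute spent each year keeping the model up to date."""
    return cost_per_refresh * REFRESHES_PER_YEAR[cadence]


def lifetime_cost(initial_training: float, cost_per_refresh: float,
                  cadence: str, annual_running: float, years: int) -> float:
    """Initial training plus recurring retraining and running costs."""
    yearly = annual_retraining_cost(cost_per_refresh, cadence) + annual_running
    return initial_training + years * yearly


# Hypothetical: initial training 800, each refresh 50,
# running costs 730 a year, evaluated over three years.
for cadence in REFRESHES_PER_YEAR:
    total = lifetime_cost(800, 50, cadence, annual_running=730, years=3)
    print(f"{cadence}: {total}")
# monthly: 4790
# daily: 57740
```

Even with a modest per-refresh cost, a daily cadence can make retraining, rather than initial training, the largest item over a model's lifetime.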